Monte-Carlo Prediction

1. Model-Free

이전 챕터에서 Dynamic Programming에 대해서 배워보았습니다. Dynamic programming은 Bellman Equation을 통해서 optimal한 해를 찾아내는 방법으로서 MDP에 대한 모든 정보를 가진 상태에서 문제를 풀어나가는 방법을 이야기합니다.

특히 Environment의 model인 "Reward function"과 "state transition probabilities"를 알아야하기 때문에 Model-based한 방법이라고 할 수 있습니다. 이러한 방법에는 아래과 같은 문제점이 있습니다.

(1) Full-width Backup --> expensive computation (2) Full knowledge about Environment

이러한 방식으로는 바둑같은 경우의 수가 많은 문제를 풀 수가 없고 실재 세상에 적용시킬 수가 없습니다. 사실 위와 같이 학문적으로 접근하지 않더라도 이러한 방법이 사람이 배우는 방법과 많이 다르다는 것을 알 수 있습니다. 이전에도 언급했었지만 사람은 모든 것을 다 안 후에 움직이지 않습니다. 만져보면서, 밟아보면서 조금씩 배워나갑니다. 이전에도 말했듯이 Trial and error를 통해서 학습하는 것이 강화학습의 큰 특징입니다.

따라서 DP처럼 full-width backup을 하는 것이 아니라 실재로 경험한 정보들로서 update를 하는 sample backup을 하게 됩니다. 이렇게 실재로 경험한 정보들을 사용함으로서 처음부터 environment에 대해서 모든 것을 알 필요가 없습니다. Environment의 model을 모르고 학습하기 때문에 Model-free라는 말이 붙게 됩니다.

현재의 policy를 바탕으로 움직여보면서 sampling을 통해 value function을 update하는 것을 model-free prediction이라 하고 policy를 update까지 하게 된다면 model-free control이라고 합니다.

이렇게 Sampling을 통해서 학습하는 model-free 방법에는 다음 두 가지가 있습니다.

(1) Monte-Carlo (2) Temporal Difference

Monte-Carlo는 episode마다 update하는 방법이고 Temporal Difference는 time step마다 update하는 방법입니다. 이번 chapter에서는 Monte-Carlo Learning을 살펴보도록 하겠습니다.

2. Monte-Carlo

Monte-Carlo라는 말에 대해서 Sutton 교수님은 책에서 다음과 같이 이야기합니다.

The term "Monte Carlo" is often used more broadly for any estimation method whose operation involves a significant random component. Here we use it specifically for methods based on averaging complete returns

Monte-Carlo 단어 자체는 무엇인가를 random하게 측정하는 것을 뜻하는 말이라고 합니다. 강화학습에서는 "averaging complete returns"하는 방법을 의미한다고 합니다. 이것은 무엇을 의미할까요?

Monte-Carlo와 Temporal Difference로 갈리는 것은 value function을 estimation하는 방법에 따라서 입니다. value function이라는 것은 expected accumulative future reward로서 지금 이 state에서 시작해서 미래까지 받을 기대되는 reward의 총합입니다. 이것을 DP가 아니라면 어떻게 측정할 수 있을까요?

가장 기본적인 생각은 episode를 끝까지 가본 후에 받은 reward들로 각 state의 value function들을 거꾸로 계산해보는 것입니다. 따라서 MC(Monte-Carlo)는 끝나지 않는 episode에서는 사용할 수 없는 방법입니다. initial state S1에서부터 시작해서 terminal state St까지 현재 policy를 따라서 움직이게 된다면 한 time step마다 reward를 받게 될 텐데 그 reward들을 기억해두었다가 St가 되면 뒤돌아보면서 각 state의 value function을 계산하게 됩니다. 아래 recall that the return이라고 되어있는데 제가 말한 것과 같은 말입니다. 순간 순간 받았던 reward들을 시간 순서대로 discount시켜서 sample return을 구할 수 있습니다.

3. First-Visit MC vs Every-Visit MC

위에서는 한 에피소드가 끝나면 어떻게 하는 지에 대해서 말했습니다. 하지만 multiple episode를 진행할 경우에는 한 episode마다 얻었던 return을 어떻게 계산해야할까요? MC에서는 단순히 평균을 취해줍니다. 한 episode에서 어떤 state에 대해 return을 계산해놨는데 다른 episode에서도 그 state를 지나가서 다시 새로운 return을 얻었을 경우에 그 두개의 return을 평균을 취해주는 것이고 그 return들이 쌓이면 쌓일수록 true value function에 가까워지게 됩니다.

한 가지 고민해야할 점이 있습니다. 만약에 한 episode내에서 어떠한 state를 두 번 방문한다면 어떻게 해야할까요? 이 때 어떻게 하냐에 따라서 두 가지로 나뉘게 됩니다.

First-visit Monte-Carlo Policy evaluation

Every-visit Monte-Carlo Policy evaluation

말 그대로 First-visit은 처음 방문한 state만 인정하는 것이고(두 번째 그 state 방문에 대해서는 return을 계산하지 않는) every-visit은 방문할 때마다 따로 따로 return을 계산하는 방법입니다. 두 방법은 모두 무한대로 갔을 때 true value function으로 수렴합니다. 하지만 First-visit이 좀 더 널리 오랫동안 연구되어 왔으므로 여기서는 First-visit MC에 대해서 다루도록 하겠습니다. 아래는 First-Visit Monte-Carlo Policy Evaluation에 대한 Silver 교수님 수업의 자료입니다.

4. Incremental Mean

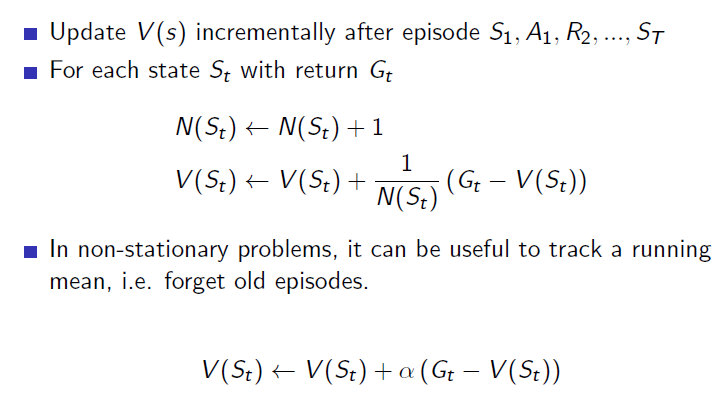

위의 평균을 취하는 식을 좀 더 발전시켜보면 다음과 같습니다. 저희가 학습하는 방법은 여러개를 모아놓고 한 번에 평균을 취하는 것이 아니고 하나 하나 더해가며 평균을 계산하기 때문에 아래과 같은 Incremental Mean의 식으로 표현할 수 있습니다.

이 Incremental Mean을 위의 First-visit MC에 적용시키면 아래와 같습니다. 같은 식을 다르게 표현한 것입니다. 이 때, 분수로 가있는 N(St)가 점점 무한대로 가게 되는데 이를 알파로 고정시켜놓으면 효과적으로 평균을 취할 수 있게 됩니다. 맨 처음 정보들에 대해서 가중치를 덜 주는 형태라고 보시면 될 것 같습니다. (Complementary filter에 대해서 아시는 분은 이해가 쉬울 것 같습니다) 이와 같이 하는 이유는 강화학습이 stationary problem이 아니기 때문입니다. 매 episode마다 새로운 policy를 사용하기 때문에(아직 policy의 update에 대해서는 이야기하지 않았습니다) non-stationary problem이므로 update하는 상수를 일정하게 고정하는 것입니다.

5. Backup Diagram

이러한 MC의 backup과정을 그림으로 나타내면 아래과 같습니다.

DP의 backup diagram에서는 one step만 표시한 것에 비해서 MC에서는 terminal state까지 쭉 이어집니다. 또한 DP에서는 one-step backup에서 그 다음으로 가능한 모든 state들로 가지가 뻗었었는데 MC에서는 sampling을 하기 때문에 하나의 가지로 terminal state까지 가게됩니다.

Monte-Carlo는 처음에 random process를 포함한 방법이라고 말했었는데 episode마다 update하기 때문에 처음 시작이 어디었냐에 따라서 또한 같은 state에서 왼쪽으로 가냐, 오른 쪽으로 가냐에 따라서 전혀 다른 experience가 됩니다. 이러한 random한 요소를 포함하고 있어서 MC는 variance가 높습니다. 대신에 random인만큼 어딘가에 치우치는 경향은 적어서 bias는 낮은 편입니다.

6. Example

Silver교수님 강의에서는 Blackjack 게임을 Monte-Carlo policy evaluation의 예제로 설명합니다. 저에게는 블랙잭 자체의 룰을 몰라서 이 예제가 어렵게 다가왔기 때문에 예제 자체에 대해서는 설명하지 않겠습니다. 게임의 설정은 아래와 같습니다.

이와 같은 게임에서 policy를 정해놓고 계속 computer로 시뮬레이션을 돌리면서 얻은 reward들로 sample return을 구하고 그 return들을 incrementally mean을 취하면 아래과 같은 그래프로 표현할 수 있습니다.

Ace의 설정에 따라서 위 아래로 나뉘는 데 위의 두 개의 그래프만 보도록 하겠습니다. policy는 그래프 아래에 설정되어 있습니다. state는 x,y 2차원으로 표현되며 z축은 각 state의 value function이 됩니다. episode를 지남에 따라 점차 어떠한 모양으로 수렴하는 것을 볼 수 있습니다. 500,000번쯤 게임을 play했을 경우에 거의 수렴한 것을 볼 수 있습니다. 앞으로 할 Control에서는 이 value function을 토대로 policy를 update하게 됩니다. 그래서 어떤 것이 좋은 policy인지 비교가 가능해집니다.

Last updated